Deployment Guide

How to deploy BaseKit on-premise

Production Environment

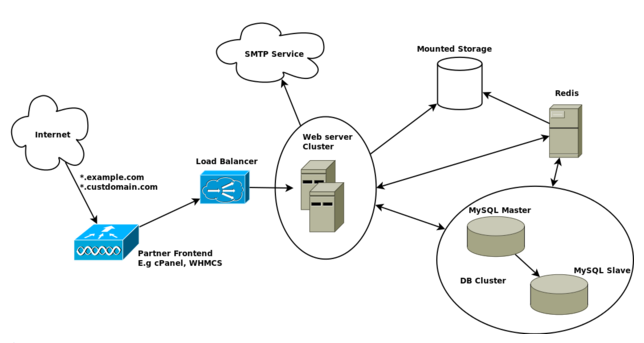

Here's an example of a minimal live/production environment:

Critically, this setup includes a web server and database cluster, which provides resilience and stability to the service. A load balancer should distribute traffic to the web servers intelligently, sending traffic only to live nodes.

Load Balancer

The load balancer distributes traffic between the web servers, providing scalability and resilience. The choice of load balancer, along with its configuration and maintenance, is left entirely to the partner.

Network Connectivity

We expect the following connectivity to be in place over a gigabit ethernet switched network:

| Function | Inbound | Outbound |
|---|---|---|
| Web | TCP ports 80 and 443 open to all. SSH access from our hub server (46.137.96.109). | All high ports (to respond to HTTP and HTTPS requests). SSH and port 80 access to our hub server (46.137.96.109). Ports 3310, 3311, 3312 and 3313 to the DB servers. Port 6379 to the Redis server. Port 80 access to all web servers. Access to the SMTP service. Port 80 access to the load balancer. |
| Redis | TCP port 6379 from the web servers. SSH access from our hub server (46.137.96.109). | All high ports to the web servers. SSH and port 80 access to our hub server (46.137.96.109). Port 80 access to all web servers. Access to the SMTP service. Port 80 access to the load balancer. Ports 3310, 3311, 3312 and 3313 to both DBs. |
| Database | TCP ports 3310, 3311, 3312 and 3313 from the web servers and all DB servers. SSH access from our hub server (46.137.96.109). | All high ports to web servers and DB servers. Ports 3310, 3311, 3312 and 3313 to all DB servers. SSH and port 80 access to our hub server (46.137.96.109). |
| SMTP | SMTP connections from all web servers. | |

Depending on how the environment evolves, we may well require access to be opened up to different servers and ports at different times.
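Before handover, it can help to confirm from each server that the outbound rules in the table are actually in place. The following is a minimal sketch of such a check, not a BaseKit tool: the function names and the `10.0.0.x` addresses in the usage note are placeholders invented for illustration, and only the port numbers come from the table above.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def report(targets, timeout: float = 3.0) -> dict:
    """Map each (host, port) pair to whether a TCP connection succeeded."""
    return {(host, port): can_connect(host, port, timeout) for host, port in targets}
```

Run from a web server, a call such as `report([("10.0.0.21", 3310), ("10.0.0.31", 6379)])` (placeholder DB and Redis addresses) returns a dictionary showing which destinations were reachable. A successful TCP connection only shows that the port is open, not that the service behind it is healthy.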

Monitoring

We use Zabbix to monitor live environments. In order to monitor all servers, we will need outbound access on port 10051 to IP address 46.51.192.112.

If monitoring is not required, then this port need not be open to us.

Staging Environment

The staging environment provides a safe environment in which we (BaseKit and the partner) can test upgrades to the code.

In an ideal world, the staging environment would be a smaller replica of the live environment. In practice, however, a single server capable of running Varnish, Apache, Redis and MySQL is fine. This is why the memory and storage are quite high in the minimum specs above.

SMTP Service

The BaseKit application needs to send emails through an SMTP service. These include error emails and mails generated by customers’ websites through the form widget, for example ‘contact us’ emails and order emails.

We can configure the application to use any SMTP service, and it can authenticate to that service.

The SMTP service must be configured to allow mail from all web servers in the cluster and also to relay mail for a particular ‘from’ address which will need to be defined by the partner.

We leave the choice of SMTP server along with the configuration and maintenance entirely up to the partner.
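One way to confirm the relay rules described above is to send a test message from each web server using the agreed ‘from’ address. The sketch below uses Python's standard `smtplib`; it is an illustration rather than part of the BaseKit application. The function names, the default port 25, and the use of STARTTLS before authentication are all assumptions, so adjust them to match the SMTP service the partner has chosen.

```python
import smtplib
from email.message import EmailMessage


def build_test_message(from_addr: str, to_addr: str, origin_host: str) -> EmailMessage:
    """Build a small test mail that records which server it was sent from."""
    msg = EmailMessage()
    msg["From"] = from_addr
    msg["To"] = to_addr
    msg["Subject"] = f"SMTP relay test from {origin_host}"
    msg.set_content(f"Relay test sent from {origin_host} as {from_addr}.")
    return msg


def send_test_message(msg: EmailMessage, smtp_host: str, smtp_port: int = 25,
                      username: str = None, password: str = None,
                      timeout: float = 10.0) -> None:
    """Send msg through the SMTP service; raises smtplib.SMTPException on refusal."""
    with smtplib.SMTP(smtp_host, smtp_port, timeout=timeout) as smtp:
        if username is not None:
            # Assumption: the service offers STARTTLS and expects a login.
            smtp.starttls()
            smtp.login(username, password)
        smtp.send_message(msg)
```

If the service refuses to relay for the ‘from’ address or for the sending server, `send_test_message` raises an exception, which makes a misconfiguration visible before the application goes live.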

Storage

There is a need for storage to be shared between all web servers and the Redis server. We leave the choice of this storage to the partner, but something like NFS has proven adequate in the past.

The shared storage should not reside on one of the web servers, but must be mountable by us from all web servers and the Redis server.

The databases also require attached storage with a very fast access rate.

For both web and DB storage, we ask that whatever storage solution the partner chooses be expandable and deliver a minimum of 300 random write IOPS, preferably 500 IOPS.

We recommend a RAID 10 array set up as an LVM volume for the DB storage.
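As a rough sanity check against the IOPS figures above, the sketch below issues synced 4 KB writes at random offsets in a temporary file and reports the achieved rate. It is only an approximation under stated assumptions: the block size, file size and function names are illustrative choices, it relies on `os.pwrite` (available on Unix-like systems), and a dedicated benchmarking tool such as fio will give more trustworthy numbers.

```python
import os
import random
import tempfile
import time


def estimate_random_write_iops(directory: str, file_size: int = 64 * 1024 * 1024,
                               block_size: int = 4096, duration: float = 5.0) -> float:
    """Estimate synced random-write operations per second on the given directory."""
    blocks = file_size // block_size
    payload = os.urandom(block_size)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        os.ftruncate(fd, file_size)  # Reserve the address range to write into.
        writes = 0
        start = time.monotonic()
        while time.monotonic() - start < duration:
            offset = random.randrange(blocks) * block_size
            os.pwrite(fd, payload, offset)
            os.fsync(fd)  # Force each write to storage before counting it.
            writes += 1
        elapsed = time.monotonic() - start
    finally:
        os.close(fd)
        os.unlink(path)
    return writes / elapsed


def meets_minimum(iops: float, minimum: float = 300.0) -> bool:
    """Compare a measured rate with the 300 IOPS minimum stated above."""
    return iops >= minimum
```

Point `directory` at a path on the storage being assessed, for example the NFS mount or the DB volume. Caches in the storage stack can inflate the result, so treat a marginal pass with caution.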

Partitioning

We ask for roughly the following partitioning layouts:

Databases

50GB root partition (RAID 10)

20GB swap partition that is separate from the LVM created for the DB data

500GB RAID 10 partition on a separate device

Webs

50GB for the home partition (most of our footprint will be in /home/basekit/).

NTP Service

Please ensure that all servers have access to a time server and are properly synced.

DNS

Each environment commissioned should have a domain. The live domain should point to the live load balancer. The staging domain should point to the staging server.

These domains need to be in place before we commence the hardware installation so that we can immediately use DNS for accurate testing and a proper user experience.

We would expect the partner to be able to register and configure their own domains.

All changes must be made on the DNS servers that the domain is pointing to.

Below are examples of how we recommend DNS should be set up.

Live

Load Balancer IP: 123.123.123.123

Production Domain: example.com

Records required:

example.com A => 123.123.123.123

*.example.com A => 123.123.123.123

Customers would then point their domain to either the environment domain or to the load balancer IP:

custdomain.com A => 123.123.123.123

*.custdomain.com CNAME => example.com

Staging

Staging Server IP: 234.234.234.234

Staging Domain: staging-example.com

Records required:

staging-example.com A => 234.234.234.234

*.staging-example.com A => 234.234.234.234

As this is a staging environment, there will be no customer domains on this environment although if any test domains are used, they will need to be set up as follows:

testdomain.com A => 234.234.234.234

*.testdomain.com CNAME => staging-example.com
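Once the records are in place, they can be verified before installation begins. The sketch below checks that both the bare domain and a random subdomain (to exercise the wildcard record) resolve to the expected IP address. The function names are hypothetical, and the check uses whichever resolver the machine running it is configured with, so results may lag behind recent DNS changes.

```python
import socket
import uuid


def resolve_ipv4(hostname: str) -> set:
    """Return the set of IPv4 addresses a hostname resolves to (empty on failure)."""
    try:
        infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET,
                                   type=socket.SOCK_STREAM)
    except socket.gaierror:
        return set()
    return {info[4][0] for info in infos}


def check_environment_dns(domain: str, expected_ip: str, lookup=resolve_ipv4) -> dict:
    """Check the bare-domain record and the wildcard record for one environment."""
    # A random label should only resolve if the wildcard record exists.
    probe = f"{uuid.uuid4().hex[:12]}.{domain}"
    return {
        "apex": expected_ip in lookup(domain),
        "wildcard": expected_ip in lookup(probe),
    }
```

With the example values above, `check_environment_dns("staging-example.com", "234.234.234.234")` would be expected to return `{"apex": True, "wildcard": True}` once both records exist. The `lookup` parameter exists so that a different resolver function can be substituted.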

Assistance

For any queries about infrastructure please feel free to get in touch with:

Andy Waddams (Infrastructure Team Lead): andy@basekit.com

Infrastructure Team: infrastructure@basekit.com
